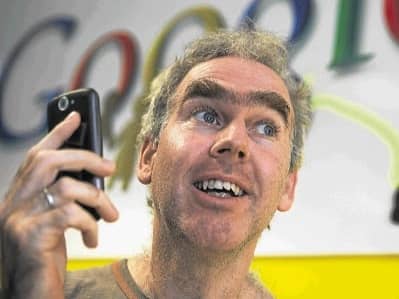

Johan Schalkwyk

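Johan Schalkwyk is a computer scientist known for his work in artificial intelligence, particularly speech technology and large language models. He held senior roles at Google, where he contributed to areas such as voice search and multimodal AI. He currently serves as a strategic advisor at Sense, focusing on AI for the energy transition, and recently joined Meta Superintelligence Labs. [1] [2] [36]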

Education

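Johan Schalkwyk earned a Master of Engineering (M.Eng.) degree in robotics engineering from the University of Pretoria, with a focus on reinforcement learning. He completed the program in 1993 with a GPA of 4.0. [39]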

Career

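Johan Schalkwyk spent many years at Google, where he was recognized as a Google Fellow in AI. His work there spanned several key areas of artificial intelligence and machine learning. As technical lead for speech, he directed strategic research investments in speech recognition and synthesis. That leadership contributed to innovations such as Google Voice Search, launched in 2008 and described as the world's first voice search experience. He also helped advance on-device processing and the application of neural models across Google products, including Google Assistant and YouTube, extending support to more than 80 languages. Later, at Google DeepMind, he worked on multimodal perception and large language models, including contributions to the Gemini family of models. [1] [2]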

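In May 2024, Schalkwyk joined Sense, a company specializing in embedded intelligence for homes and the power grid, as a strategic advisor on artificial intelligence. In this role he focuses on using AI and machine learning to support the global energy transition. His advisory work at Sense aims to develop new tools that let utilities and consumers use data and machine learning to manage energy demand, improve efficiency, and strengthen grid security. Sense uses machine learning to give consumers real-time insight into their home energy use, and to give utilities grid intelligence for tasks such as fault identification, power flow tracking, and electrification planning. [1] [37] [38]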

Meta Superintelligence Labs

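In June 2025, Mark Zuckerberg announced the creation of Meta Superintelligence Labs (MSL), a new organization within Meta Platforms focused on developing artificial superintelligence. Johan Schalkwyk was named as one of the key new team members joining the initiative. MSL was established to house the teams working on foundation models (including the Llama software), products, and fundamental AI research projects. The formation of MSL and the recruitment of top AI talent such as Schalkwyk are part of Meta's effort to compete in the rapidly evolving field of artificial intelligence. [40] [41]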

Publications

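Johan Schalkwyk has co-authored numerous research papers in computer science, focusing on speech recognition, natural language processing, and machine learning. His publications span several decades and have appeared in major conferences and journals.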

Selected publications to which he contributed include:

- 2020 – present

- Gemini 1.5: Unlocking multimodal understanding across millions of tokens of context. CoRR abs/2403.05530 (2024) [3]

- Coupling Speech Encoders with Downstream Text Models. CoRR abs/2407.17605 (2024) [4]

- SLM: Bridge the Thin Gap Between Speech and Text Foundation Models. ASRU 2023: 1-8 (2023) [5]

- Lego-Features: Exporting Modular Encoder Features for Streaming and Deliberation ASR. ICASSP 2023: 1-5 (2023) [6]

- Google USM: Scaling Automatic Speech Recognition Beyond 100 Languages. CoRR abs/2303.01037 (2023) [7]

- AudioPaLM: A Large Language Model That Can Speak and Listen. CoRR abs/2306.12925 (2023) [8]

- Gemini: A Family of Highly Capable Multimodal Models. CoRR abs/2312.11805 (2023) [9]

- 2010 – 2019

- On lattice generation for large vocabulary speech recognition. ASRU 2017: 228-235 (2017) [10]

- Speech Research at Google to Enable Universal Speech Interfaces. New Era for Robust Speech Recognition, Exploiting Deep Learning 2017: 385-399 (2017) [11]

- Long short term memory neural network for keyboard gesture decoding. ICASSP 2015: 2076-2080 (2015) [12]

- Learning acoustic frame labeling for speech recognition with recurrent neural networks. ICASSP 2015: 4280-4284 (2015) [13]

- Voice Query Refinement. INTERSPEECH 2012: 2462-2465 (2012) [14]

- A Filter-Based Algorithm for Efficient Composition of Finite-State Transducers. Int. J. Found. Comput. Sci. 22(8): 1781-1795 (2011) [15]

- Voice search for development. INTERSPEECH 2010: 282-285 (2010) [16]

- On-demand language model interpolation for mobile speech input. INTERSPEECH 2010: 1812-1815 (2010) [17]

- Query language modeling for voice search. SLT 2010: 127-132 (2010) [18]

- Filters for Efficient Composition of Weighted Finite-State Transducers. CIAA 2010: 28-38 (2010) [19]

- 2000 – 2009

- OpenFst. FSMNLP 2009: 47 (2009) [20]

- Mobile media search. ICASSP 2009: 4897-4900 (2009) [21]

- Language modeling for what-with-where on GOOG-411. INTERSPEECH 2009: 991-994 (2009) [22]

- A generalized composition algorithm for weighted finite-state transducers. INTERSPEECH 2009: 1203-1206 (2009) [23]

- Semantic context effects in the recognition of acoustically unreduced and reduced words. INTERSPEECH 2009: 1867-1870 (2009) [24]

- Deploying GOOG-411: Early lessons in data, measurement, and testing. ICASSP 2008: 5260-5263 (2008) [25]

- OpenFst: A General and Efficient Weighted Finite-State Transducer Library. CIAA 2007: 11-23 (2007) [26]

- Speech recognition with dynamic grammars using finite-state transducers. INTERSPEECH 2003: 1969-1972 (2003) [27]

- 1990 – 1999

- Universal speech tools: the CSLU toolkit. ICSLP 1998 (1998) [28]

- Experiments with a spoken dialogue system for taking the US census. Speech Commun. 23(3): 243-260 (1997) [29]

- CSLUsh: an extendible research environment. EUROSPEECH 1997: 689-692 (1997) [30]

- Speaker verification with low storage requirements. ICASSP 1996: 693-696 (1996) [31]

- Building 10,000 spoken dialogue systems. ICSLP 1996: 709-712 (1996) [32]

- Speech recognition using syllable-like units. ICSLP 1996: 1117-1120 (1996) [33]

- Detecting an imposter in telephone speech. ICASSP (1) 1994: 169-172 (1994) [34]

- A prototype voice-response questionnaire for the U.S. census. ICSLP 1994: 683-686 (1994) [35]

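His work includes contributions to OpenFst, a library for constructing and manipulating weighted finite-state transducers that is widely used in speech and language processing applications. [26] [20]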
