PaLM AI

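PaLM (Pathways Language Model) is the first large language model Google developed on its Pathways architecture. It is designed to handle many tasks at once, adapt efficiently to new tasks, and show a more comprehensive understanding of complex information.[2][12]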

Overview

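PaLM AI uses Google's Pathways system to scale training across 6,144 TPU v4 chips, the largest TPU-based configuration used to train a language model at the time of its release. Training combined data parallelism across two Cloud TPU v4 Pods with standard data and model parallelism within each Pod. The model was trained on a broad multilingual dataset that includes web pages, books, Wikipedia, conversations, and source code, using a custom vocabulary designed to preserve structures that matter for both natural language and programming code.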
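The training layout described above can be pictured as a three-axis grid of chips: Pod, data-parallel replica, and model-parallel shard. The Python sketch below maps a flat chip index onto that grid. The 256-way data and 12-way model split inside each Pod is taken from the PaLM paper rather than from this article, and the names `ChipCoord`, `chip_coord`, and `same_model_group` are hypothetical; this is index arithmetic only and does not use any Pathways or TPU API.

```python
from typing import NamedTuple

# Assumed layout (from the PaLM paper, not this article):
# 2 Pods x 256-way data parallelism x 12-way model parallelism.
NUM_PODS = 2
DATA_PARALLEL = 256
MODEL_PARALLEL = 12
CHIPS_PER_POD = DATA_PARALLEL * MODEL_PARALLEL  # 3,072 chips per Pod
TOTAL_CHIPS = NUM_PODS * CHIPS_PER_POD          # 6,144 chips in total


class ChipCoord(NamedTuple):
    pod: int      # Pod index; gradients are combined across Pods (Pod-level data parallelism)
    replica: int  # data-parallel replica within the Pod: which slice of the batch it sees
    shard: int    # model-parallel shard: which slice of each layer's computation it runs


def chip_coord(chip_id: int) -> ChipCoord:
    """Map a flat chip index to (pod, replica, shard) in row-major order."""
    if not 0 <= chip_id < TOTAL_CHIPS:
        raise ValueError(f"chip_id must be in [0, {TOTAL_CHIPS})")
    pod, within_pod = divmod(chip_id, CHIPS_PER_POD)
    replica, shard = divmod(within_pod, MODEL_PARALLEL)
    return ChipCoord(pod, replica, shard)


def same_model_group(a: int, b: int) -> bool:
    """True if two chips process the same batch slice and split the layers between them."""
    ca, cb = chip_coord(a), chip_coord(b)
    return (ca.pod, ca.replica) == (cb.pod, cb.replica)


print(chip_coord(3072))  # → ChipCoord(pod=1, replica=0, shard=0)
```

Under these assumptions, chip 3,072 is the first chip of the second Pod, and chips 0 through 11 form one model-parallel group that shares a slice of the batch.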

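The project follows a phased decentralization approach: each month, 5% of development is moved on-chain until a target balance is reached, with roughly half of all activity running directly on Ethereum or on Layer 2 networks such as SKALE.

The model was first released in April 2022 and remained private until March 2023, when Google launched an API for PaLM and several related technologies.[1][4][11]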
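The phased on-chain schedule mentioned above can be made concrete with a short calculation. The sketch assumes that "5% per month" means 5 percentage points of total development activity and that the "target balance" is the roughly 50% on-chain share the article mentions; both are interpretations, and the function name `onchain_schedule` is hypothetical. Under those assumptions the target is reached after 10 months.

```python
from typing import List


def onchain_schedule(step_pct: int = 5, target_pct: int = 50) -> List[int]:
    """On-chain share (percent) at the end of each month under a linear schedule.

    Assumes `step_pct` percentage points move on-chain every month until
    `target_pct` is reached. The 50% default interprets "roughly half".
    """
    if step_pct <= 0:
        raise ValueError("step_pct must be positive")
    schedule: List[int] = []
    share = 0
    while share < target_pct:
        # Never overshoot the target in the final month.
        share = min(share + step_pct, target_pct)
        schedule.append(share)
    return schedule


months = onchain_schedule()
print(len(months), months[-1])  # → 10 50
```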

Model architecture and capabilities

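The model performs strongly in several key areas:

- Commonsense reasoning: PaLM can understand and answer questions that require everyday knowledge and logical inference

- Arithmetic reasoning: the model can solve math problems by reasoning step by step

- Joke explanation: PaLM can analyze and explain humor, showing an understanding of cultural context and linguistic nuance

- Code generation: the model can generate working code in multiple programming languages

- Translation: PaLM supports high-accuracy translation between many languages [1][12]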
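Step-by-step arithmetic reasoning of the kind listed above is usually elicited with chain-of-thought prompting, in which a few-shot exemplar shows its intermediate working before stating the answer; the PaLM paper reports its arithmetic results using this technique. The sketch below only builds such a prompt and parses the final answer from a completion; it does not call any model. The exemplar is a well-known one from the chain-of-thought prompting literature, and the function names are hypothetical.

```python
import re
from typing import Optional

# Illustrative few-shot exemplar whose answer spells out the intermediate steps.
EXEMPLAR_Q = ("Roger has 5 tennis balls. He buys 2 more cans of tennis balls. "
              "Each can has 3 tennis balls. How many tennis balls does he have now?")
EXEMPLAR_A = ("Roger started with 5 balls. 2 cans of 3 tennis balls each is 6 tennis balls. "
              "5 + 6 = 11. The answer is 11.")


def build_cot_prompt(question: str) -> str:
    """Prefix the worked exemplar so the model imitates step-by-step reasoning."""
    return f"Q: {EXEMPLAR_Q}\nA: {EXEMPLAR_A}\n\nQ: {question}\nA:"


def extract_answer(completion: str) -> Optional[str]:
    """Return the number after 'The answer is', or None if the phrase is missing."""
    match = re.search(r"The answer is\s+(-?\d+(?:\.\d+)?)", completion)
    return match.group(1) if match else None
```

For example, `extract_answer(EXEMPLAR_A)` returns `"11"`, while a completion that never states "The answer is" yields `None`, which a caller could treat as a parse failure.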

Specialized variants

Med-PaLM

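Med-PaLM, developed jointly by Google and DeepMind, is a version of PaLM 540B fine-tuned on medical data. Beyond answering questions accurately, Med-PaLM can explain the reasoning behind its responses and evaluate its own answers, which makes it particularly valuable in healthcare settings.[7]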

PaLM-E

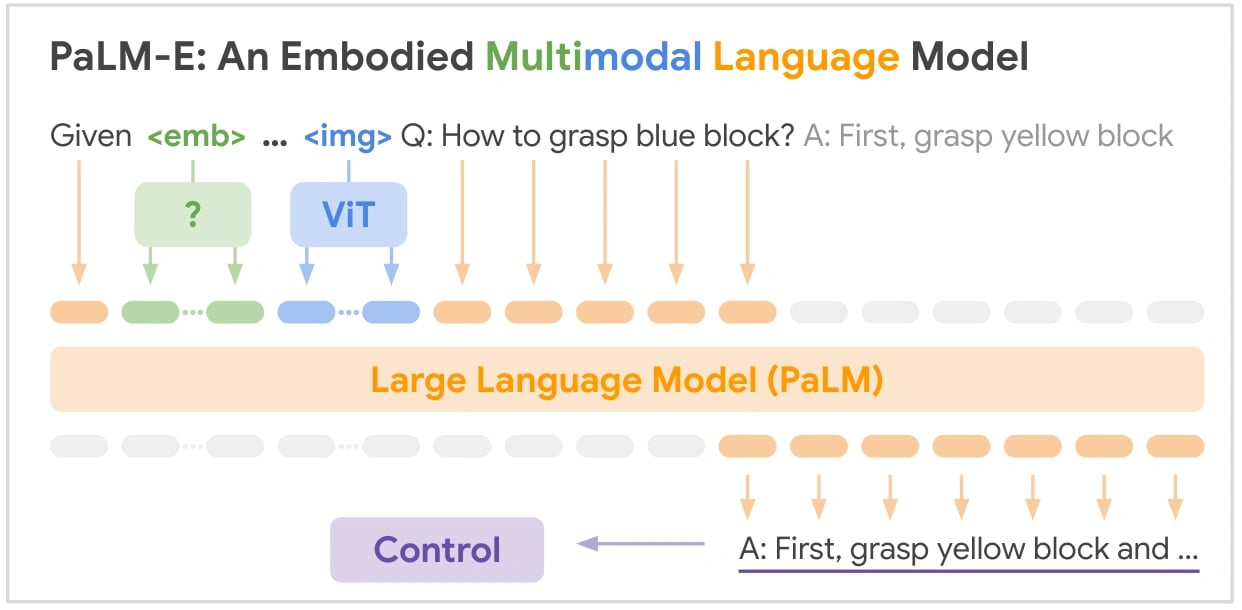

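PaLM-E extends the base model with a vision transformer, producing a state-of-the-art vision-language model for robotic manipulation. The model can perform robotic tasks with competitive performance without retraining or fine-tuning, which demonstrates the flexibility of the underlying architecture.[8]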
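The PaLM-E paper describes encoding images with a vision transformer, projecting the resulting vectors into the language model's word-embedding space, and interleaving them with ordinary text-token embeddings to form "multimodal sentences". The sketch below shows only that splicing step with toy vectors; the `<img>` placeholder, the two- and three-dimensional sizes, and the untrained projection matrix are illustrative assumptions, not PaLM-E's actual values.

```python
from typing import Dict, List, Sequence

Vector = List[float]
IMG = "<img>"  # hypothetical placeholder marking where image features are spliced in


def project(feature: Sequence[float], weight: Sequence[Sequence[float]]) -> Vector:
    """Linear projection: one row of `weight` per language-model embedding dimension."""
    return [sum(w * x for w, x in zip(row, feature)) for row in weight]


def build_multimodal_sentence(
    tokens: Sequence[str],
    text_embed: Dict[str, Vector],
    image_features: Sequence[Sequence[float]],
    weight: Sequence[Sequence[float]],
) -> List[Vector]:
    """Replace the IMG placeholder with projected image vectors; embed other tokens normally."""
    sequence: List[Vector] = []
    for tok in tokens:
        if tok == IMG:
            sequence.extend(project(f, weight) for f in image_features)
        else:
            sequence.append(text_embed[tok])
    return sequence


# Toy example: 3-dim "ViT" features projected into a 2-dim word-embedding space.
text_embed = {"What": [1.0, 0.0], "is": [0.0, 1.0], "this?": [1.0, 1.0]}
image_features = [[1.0, 2.0, 3.0], [0.0, 1.0, 0.0]]
weight = [[1.0, 0.0, 0.0], [0.0, 0.0, 1.0]]
seq = build_multimodal_sentence([IMG, "What", "is", "this?"], text_embed, image_features, weight)
print(seq)  # → [[1.0, 3.0], [0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
```

The resulting sequence has one vector per image patch followed by one per text token, so the language model can attend over both without a separate image pathway.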

AudioPaLM

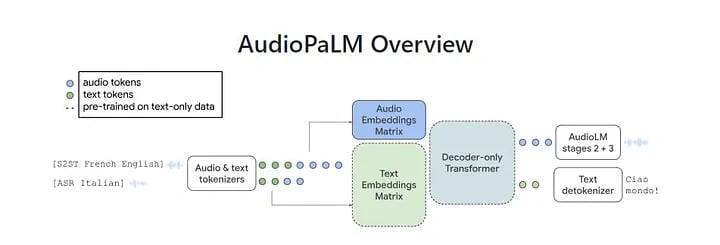

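In June 2023, Google announced AudioPaLM for speech-to-speech translation, built on the PaLM-2 architecture and initialized from PaLM-2. It is a multimodal language model that combines PaLM-2 (a text-based model) with AudioLM (a speech-based model) to enable both speech understanding and speech generation. Because the combined architecture can process and generate both spoken and written language, it supports a range of applications, including speech recognition and speech-to-speech translation.[9]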
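One way a single decoder can read and write both text and speech is to give discrete audio tokens their own IDs after the text vocabulary and add matching rows to the token-embedding table; the AudioPaLM paper describes extending PaLM-2's embedding matrix in this spirit. In the sketch below, both vocabulary sizes and the zero initialization of the new rows are hypothetical placeholders, not AudioPaLM's real configuration.

```python
from typing import List

# Hypothetical sizes; AudioPaLM's real vocabularies may differ.
TEXT_VOCAB_SIZE = 32_000
AUDIO_VOCAB_SIZE = 1_024


def audio_code_to_id(code: int) -> int:
    """Place discrete audio code `code` after the last text token ID."""
    if not 0 <= code < AUDIO_VOCAB_SIZE:
        raise ValueError(f"audio code must be in [0, {AUDIO_VOCAB_SIZE})")
    return TEXT_VOCAB_SIZE + code


def id_to_modality(token_id: int) -> str:
    """Tell whether a joint-vocabulary ID refers to a text token or an audio token."""
    if token_id < 0:
        raise ValueError("token IDs are non-negative")
    if token_id < TEXT_VOCAB_SIZE:
        return "text"
    if token_id < TEXT_VOCAB_SIZE + AUDIO_VOCAB_SIZE:
        return "audio"
    raise ValueError(f"token ID {token_id} is outside the joint vocabulary")


def extend_embeddings(text_table: List[List[float]], init: float = 0.0) -> List[List[float]]:
    """Keep the existing text rows and append one new row per audio token."""
    if len(text_table) != TEXT_VOCAB_SIZE:
        raise ValueError("text table must have one row per text token")
    dim = len(text_table[0])
    return text_table + [[init] * dim for _ in range(AUDIO_VOCAB_SIZE)]
```

With these placeholder sizes, audio code 7 becomes ID 32,007, and `id_to_modality(32_007)` returns `"audio"`. Keeping the text rows untouched is what lets the extended model start from the pretrained text model's weights.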

Evolution and successor models

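In May 2023, Google announced PaLM 2 as the successor to the original PaLM model during its annual Google I/O keynote. PaLM 2 is designed to improve performance in multilingual understanding, reasoning, and coding. It was trained on a diverse multilingual corpus covering more than 100 languages, which allows it to handle complex language tasks such as translation, idiom comprehension, and nuanced text generation. Its training data also included scientific and mathematical content, strengthening its logic and reasoning abilities. In addition, PaLM 2 was pre-trained on a large body of open-source code, covering both widely used and more specialized programming languages.[10]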

Tokenomics

PaLM AI token ($PALM)

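The $PALM token was launched on the Ethereum network to fund ongoing bot development, maintain access to the latest large language models on Telegram, and serve as a tradable liquid asset within the ecosystem.[5][6]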

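The total supply of $PALM is capped at 100 million tokens. After burns totaling 22.5% of the supply, 77.5 million tokens remain in circulation: 20 million tokens (20%) were permanently removed from circulation at launch, and a further 2.5 million tokens (2.5%) were burned later. A large share of the supply, 75 million tokens (75%), was allocated to liquidity to support trading access. The remaining 5% (5 million tokens) is reserved for potential future development, including centralized exchange listings.[5]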
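The supply figures above can be checked with a few lines of arithmetic. The sketch works in whole tokens and uses only the numbers stated in this section; since the article does not say which allocation the later 2.5 million-token burn came from, the code checks totals only.

```python
# All figures in whole tokens, taken from the $PALM tokenomics paragraph above.
TOTAL_SUPPLY = 100_000_000

LAUNCH_BURN = 20_000_000  # permanently removed at launch (20%)
LATER_BURN = 2_500_000    # burned afterwards (2.5%)
LIQUIDITY = 75_000_000    # allocated to liquidity (75%)
RESERVE = 5_000_000       # reserved for future development, e.g. CEX listings (5%)


def share_pct(amount: int) -> float:
    """Express a token amount as a percentage of the capped total supply."""
    return amount * 100 / TOTAL_SUPPLY


total_burned = LAUNCH_BURN + LATER_BURN
circulating = TOTAL_SUPPLY - total_burned

print(f"burned: {total_burned:,} tokens ({share_pct(total_burned)}%)")
print(f"circulating: {circulating:,} tokens")

# The launch split (liquidity + reserve + launch burn) accounts for the whole cap.
assert LIQUIDITY + RESERVE + LAUNCH_BURN == TOTAL_SUPPLY
```

This prints `burned: 22,500,000 tokens (22.5%)` and `circulating: 77,500,000 tokens`, matching the stated figures.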

Partnerships

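PaLM AI has partnered with NFINITY AI to collaborate in the creative AI space. The collaboration focuses on integrating tools from both platforms, including PaLM AI's systems and NFINITY's Creator Studio and $NFNT utilities, which build on advances in generative AI. The partnership was formed during the Token2049 event and reflects a shared focus on expanding creative applications of AI.[13]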

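PaLM AI has partnered with The Guru Fund to integrate the fund's smart contracts into its automated trading system. The collaboration aims to facilitate pool management within the PaLM ecosystem. Further details and progress are to be shared publicly in upcoming community discussions.[14]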

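PaLM AI has partnered with GameHub ($GHUB), a platform focused on Web3 gaming within the Telegram Mini App ecosystem. The partnership centers on integrating gamified features into PaLM's product suite, including play-to-earn (P2E) and player-versus-player (PvP) games. As part of the partnership, a custom game featuring $PALM token mechanics has launched, with a portion of its profits allocated to token burns that reduce the circulating supply.[15]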
