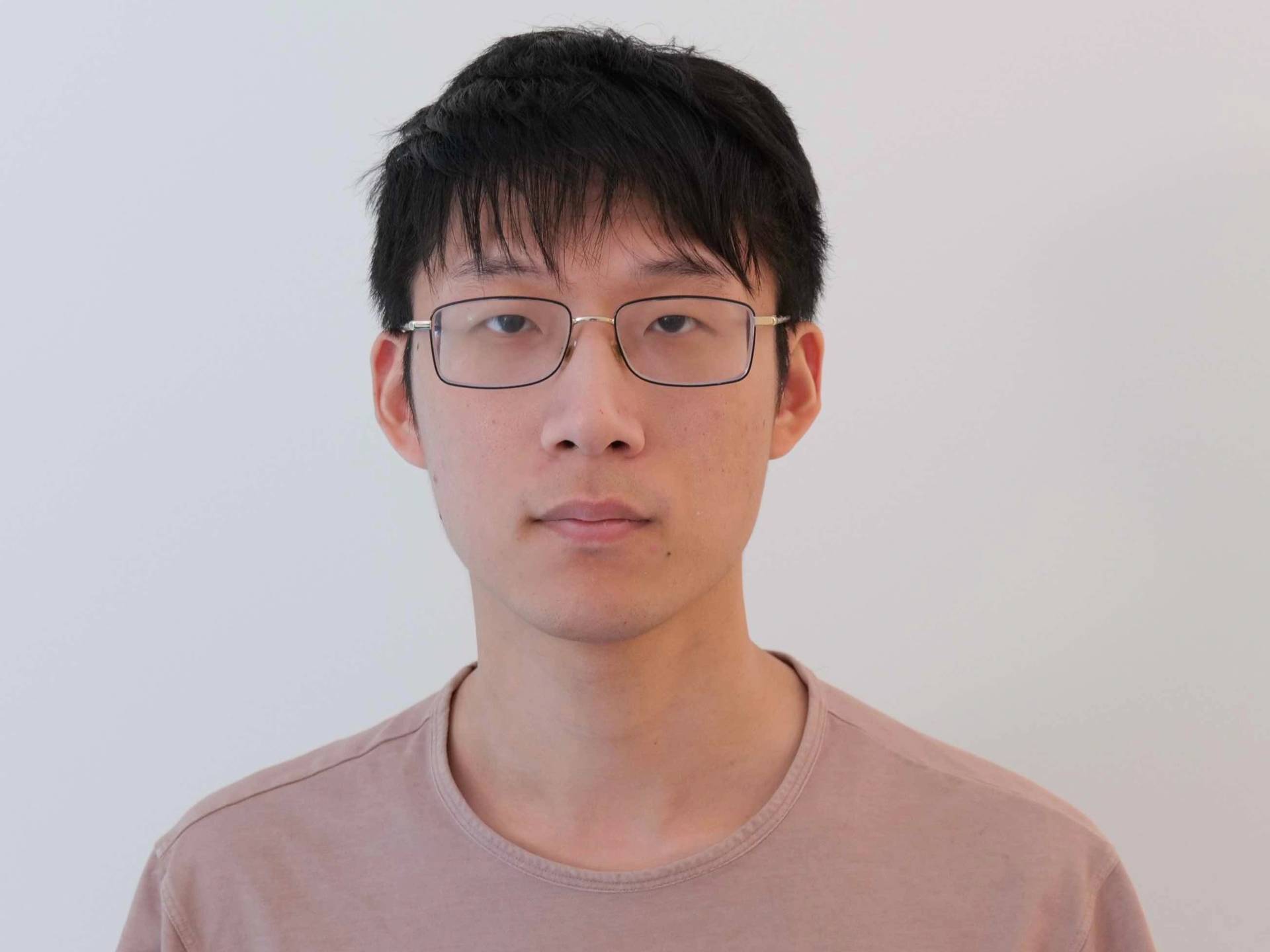

Zhiqing Sun

Zhiqing Sun is an artificial intelligence researcher specializing in large language models (LLMs) and autonomous AI agents. He is currently a researcher at Meta Superintelligence Labs and was previously part of the research team at OpenAI, where he contributed to the development of advanced agentic systems and foundational models. [1]

Education

Sun earned a Ph.D. in Computer Science from the Language Technologies Institute at Carnegie Mellon University. On February 28, he defended his doctoral thesis, "Scalable Alignment of Large Language Models Towards Truth-Seeking, Complex Reasoning, and Human Values." His doctoral research focused on methods for making large-scale AI systems truthful, capable of multi-step reasoning, and aligned with human-defined principles.

The thesis covered several areas of AI alignment research. These included Fact-RLHF, a technique that uses reinforcement learning from human feedback to improve the factual accuracy of model outputs; Lean-STaR, a method that trains models to interleave informal reasoning steps with formal proof steps in the Lean theorem prover; and studies on easy-to-hard generalization, which investigate how models trained on simpler tasks can learn to solve harder, unseen problems. His research also explored self-alignment techniques, in which models learn to align themselves with desired values under minimal human supervision, and instructable reward models, which are designed to be more easily guided and corrected by human operators. [1]

Career

Sun was a researcher at OpenAI, where he worked on training large language models to perform complex tasks. He was a key contributor to projects on highly autonomous AI agents: systems designed to carry out multi-step operations and discover new information with limited human input. This work was part of a broader effort at OpenAI to apply AI to practical, real-world tasks. [1]

In mid-2025, Sun joined Meta Superintelligence Labs (MSL). His transition to Meta occurred during a period of intense competition for top-tier AI talent, often referred to as an "AI arms race," where leading technology firms aggressively recruited experienced researchers to advance their work on artificial general intelligence (AGI). Meta, in particular, engaged in a significant hiring initiative to build out its superintelligence research division, successfully recruiting numerous specialists from competitors, including OpenAI. This strategic effort was aimed at assembling teams with proven track records of collaboration and innovation in the field of advanced AI. [2] [3]

Major Works

At OpenAI, Sun contributed to several high-profile projects aimed at advancing the capabilities of AI agents and foundational models.

ChatGPT Agent

Sun was a member of the team that developed the ChatGPT agent, a system announced in July 2025. He characterized the project as a unified agentic system that combines multiple AI technologies to create a more capable and autonomous assistant. The agent integrates an action-taking remote browser, which allows it to navigate and interact with websites; the web synthesis capabilities from the "deep research" project, enabling it to gather and consolidate information from the internet; and the established conversational strengths of ChatGPT. The primary goal of this system is to empower the AI to perform complex work on a user's behalf by directly operating a computer. This represents a significant step beyond text-based interaction, allowing the agent to execute tasks such as booking appointments, conducting detailed market research, or managing online accounts. [1]

Deep Research

Sun was also a key contributor to OpenAI's "deep research" project, which focused on building a highly autonomous agent for long-horizon tasks: complex problems that require many steps, planning, and adaptation over an extended period. The project aimed to give reasoning models the tools and autonomy to conduct comprehensive research and potentially uncover new insights without continuous human guidance. Given a challenging problem, the agent is intended to formulate a plan, gather information, and work toward a solution on its own, much as a human researcher would. [1]

BrowseComp

To systematically measure and improve the performance of web-browsing AI agents, Sun helped create BrowseComp ("Browsing Competition"), an open-source benchmark released in April 2025 that tests an agent's ability to navigate the internet to find specific, often hard-to-locate information. Sun likened the benchmark to competitions in fields such as coding or mathematics, which serve as standardized tests of intelligence and skill. While such benchmarks do not fully capture real-world complexity, they provide a framework for evaluating progress, identifying weaknesses, and guiding the development of more competent and reliable browsing agents. [1]

OpenAI o3 Model

Sun's work at OpenAI also included contributions to the o3 model, which achieved a state-of-the-art result on the Abstraction and Reasoning Corpus (ARC) benchmark. In December 2024, it was announced that o3 scored 75.7% on the ARC-AGI Semi-Private Evaluation, while a high-compute configuration of the model reached 87.5%. ARC is widely regarded in the AI community as a difficult test of abstract reasoning, a capability considered fundamental to artificial general intelligence. The test requires a system to solve novel logic puzzles it has never seen before, measuring its ability to generalize from a few examples rather than relying on pattern recognition learned from vast datasets. A high ARC score is therefore seen as an indicator of progress toward more human-like problem solving. [1]
