zkLLM

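zkLLM (Zero-Knowledge Large Language Model) is a technology that combines zero-knowledge proofs (ZKPs) with large language models to improve privacy and scalability in AI applications. By enabling sensitive data to be processed securely and efficiently, it aims to address key challenges in both the AI and blockchain fields.[1][5]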

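Overview

zkLLM, short for Zero-Knowledge Large Language Model, sits at the intersection of cryptography and artificial intelligence. It uses zero-knowledge proof systems to let a language model process private input data and generate outputs without revealing the actual content of that data. The technology responds to growing concerns about data privacy and security in AI applications, particularly in fields that handle sensitive information such as healthcare, finance, and personal communications.[2][3]

The core principle behind zkLLM is to create a trustless environment in which AI models can operate on encrypted data and deliver verifiable results without exposing the underlying information. This approach not only strengthens privacy but also opens new possibilities for collaborative AI development and deployment in regulated industries.[2][3]

Using ZKPs during LLM inference provides both authenticity and privacy. A proof can confirm that an output came from a specific model without revealing any details of that model, which protects sensitive or proprietary information. The generated proof is publicly verifiable, so anyone can confirm the authenticity of the output. Some ZKP protocols are also scalable enough to accommodate large models and complex computations, which benefits LLMs.[3]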

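Technology

Zero-Knowledge Proofs (ZKPs)

At the core of zkLLM is the use of zero-knowledge proofs, a cryptographic method that allows one party (the prover) to convince another party (the verifier) that a statement is true without revealing anything beyond the validity of the statement itself. In the context of zkLLM, this technique is applied to AI computation, enabling:[5]

- Verifying AI model outputs without access to the input data

- Proving that a model was executed correctly without revealing its parameters

- Secure multi-party computation for collaborative AI training and inference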
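
To make the prover/verifier relationship concrete, here is a minimal sketch of a classic zero-knowledge proof: a Schnorr proof of knowledge of a discrete logarithm, made non-interactive with the Fiat-Shamir heuristic. It is not the proof system used by zkLLM; it only illustrates how a verifier can be convinced that the prover knows a secret without learning it. The group parameters `P`, `Q`, `G` and the function names are illustrative assumptions, and the group is far too small for real use.

```python
import hashlib
import secrets

# Toy parameters (assumption, insecure sizes): P = 2*Q + 1 is a safe prime,
# and G generates the subgroup of prime order Q.
P = 2039
Q = 1019
G = 4


def challenge(*values):
    """Fiat-Shamir: derive the verifier's challenge by hashing the transcript."""
    data = ",".join(str(v) for v in values).encode()
    return int.from_bytes(hashlib.sha256(data).digest(), "big") % Q


def prove(secret):
    """Prove knowledge of `secret` such that public = G^secret mod P."""
    public = pow(G, secret, P)
    nonce = secrets.randbelow(Q - 1) + 1      # fresh randomness hides the secret
    commitment = pow(G, nonce, P)
    c = challenge(G, public, commitment)
    response = (nonce + c * secret) % Q
    return public, commitment, response


def verify(public, commitment, response):
    """Check G^response == commitment * public^c without ever seeing the secret."""
    c = challenge(G, public, commitment)
    return pow(G, response, P) == (commitment * pow(public, c, P)) % P


if __name__ == "__main__":
    public, commitment, response = prove(secret=123)
    print("proof accepted:", verify(public, commitment, response))
```

The verifier only sees `public`, `commitment`, and `response`. Proof systems for neural networks prove far richer statements (for example, "this output is the result of running the committed model on this input"), but the roles of the two parties are the same.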

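Two key components underpin zkLLM:[6]

- tlookup: a new zero-knowledge proof protocol designed to handle the non-arithmetic operations that are common in deep learning models. It is optimized for parallel computing environments and adds no asymptotic overhead in memory or running time.

- zkAttn: built on top of tlookup, zkAttn specifically targets verification of the attention mechanism in LLMs. It is designed to keep proof overhead manageable while balancing accuracy, running time, and memory usage.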
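
The idea of handling a non-arithmetic operation through a lookup can be sketched in a few lines. The example below checks that every claimed (input, output) pair of a ReLU appears in a precomputed table, using a rational-function identity over a prime field: the sum of 1/(β + s) over the claimed values equals the sum of m_t/(β + t) over the table entries, where m_t counts how often entry t is used. This is one common way lookup arguments are built; treating it as representative of tlookup's exact construction is an assumption. The sketch runs entirely in the clear, with no commitments and no zero knowledge, and the names `encode`, `relu_table`, and `lookup_check` are hypothetical.

```python
from collections import Counter

P = 2**61 - 1  # prime modulus for the toy field (assumption)


def inv(x):
    """Modular inverse in the field (Python 3.8+)."""
    return pow(x, -1, P)


def encode(x, y, gamma):
    """Fold a pair (x, y) into one field element with a random-looking weight gamma."""
    return (x + gamma * y) % P


def relu_table(lo, hi, gamma):
    """All valid (x, relu(x)) pairs for integer x in [lo, hi)."""
    return [encode(x, max(x, 0), gamma) for x in range(lo, hi)]


def lookup_check(claimed, table, beta):
    """Check sum 1/(beta + s) == sum m_t/(beta + t), with m_t the usage counts."""
    counts = Counter(claimed)
    lhs = sum(inv((beta + s) % P) for s in claimed) % P
    rhs = sum(counts[t] * inv((beta + t) % P) for t in table) % P
    return lhs == rhs


if __name__ == "__main__":
    # In a real protocol gamma and beta would be verifier-chosen random challenges.
    gamma, beta = 123456789, 987654321
    table = relu_table(-8, 8, gamma)
    honest = [encode(x, max(x, 0), gamma) for x in [-3, 0, 5, 5, -8]]
    cheating = honest + [encode(3, 0, gamma)]  # claims relu(3) = 0
    print(lookup_check(honest, table, beta), lookup_check(cheating, table, beta))
```

The design point is that a comparison-based function such as ReLU never has to be expressed as field arithmetic; the prover only has to show that each pair it used is a member of the table.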

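Integration with Large Language Models

zkLLM adapts zero-knowledge proof systems to work with the complex architecture of large language models. This integration involves:

- Encoding model parameters and input data into formats compatible with ZK circuits

- Designing efficient proof systems that can handle the computational scale of LLMs

- Implementing verifiable computation techniques for neural network operations

ZK proofs are essential for proving that a zkLLM computation was carried out correctly, but on their own they do not let a model compute over data that remains encrypted. This is where fully homomorphic encryption (FHE) comes in.[6]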
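
As a rough illustration of the first item in the list above, ZK circuits typically work over a finite field, so floating-point weights and activations have to be quantized to integers first. The sketch below uses fixed-point encoding with a scale of 2^16 and a 61-bit prime modulus; both values, and the helper names, are assumptions chosen for readability rather than parameters taken from any zkLLM implementation.

```python
P = 2**61 - 1      # field modulus (assumption)
SCALE = 2**16      # fixed-point scale: 16 fractional bits (assumption)


def to_field(x):
    """Quantize a float and map it into [0, P); negatives wrap around to P - |x|."""
    return round(x * SCALE) % P


def from_field(v, scale=SCALE):
    """Interpret values above P/2 as negative, then undo the fixed-point scale."""
    signed = v - P if v > P // 2 else v
    return signed / scale


def field_dot(a, b):
    """Dot product using only field additions and multiplications."""
    return sum(x * y for x, y in zip(a, b)) % P


if __name__ == "__main__":
    weights = [0.5, -1.25, 2.0]
    inputs = [1.0, 0.5, -0.75]
    acc = field_dot([to_field(w) for w in weights], [to_field(x) for x in inputs])
    # Each product carries two scale factors, so decode with SCALE * SCALE.
    print(from_field(acc, SCALE * SCALE))  # -1.625
```

Note that every multiplication doubles the number of fractional bits, so a real pipeline needs a rescaling (truncation) step between layers. That rescaling is itself a non-arithmetic operation, which is one reason such operations matter for proof systems aimed at neural networks.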

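Fully Homomorphic Encryption (FHE)

Fully homomorphic encryption is a cryptographic technique that allows computation to be performed directly on encrypted data, so the data stays protected throughout the entire computation. A common analogy is a locked box: data can be placed inside and computed on without ever opening the box. The technique has the potential to reshape the field of secure computation. FHE lets zkLLM run computations on encrypted user data, so an LLM can perform complex tasks such as sentiment analysis or text generation on the ciphertext itself, without decrypting it. ZKPs and FHE work hand in hand toward the same goal of protecting privacy.[6]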
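
The "compute without opening the box" idea can be demonstrated with a toy Paillier cryptosystem. Important caveat: Paillier is only additively homomorphic, not fully homomorphic. It supports adding ciphertexts and multiplying a ciphertext by a plaintext constant, which is enough to evaluate a linear layer with public weights on encrypted inputs, whereas real FHE schemes such as BGV, CKKS, or TFHE also support multiplying ciphertexts together. The tiny primes and all function names below are illustrative assumptions and offer no security.

```python
import math
import secrets


def keygen(p=1009, q=1013):
    """Toy Paillier keys with generator g = n + 1 (primes far too small for real use)."""
    n = p * q
    lam = (p - 1) * (q - 1) // math.gcd(p - 1, q - 1)  # lcm(p-1, q-1)
    mu = pow(lam, -1, n)                               # valid because g = n + 1
    return n, (lam, mu)


def encrypt(n, m):
    n2 = n * n
    while True:
        r = secrets.randbelow(n - 1) + 1
        if math.gcd(r, n) == 1:
            break
    return (pow(n + 1, m, n2) * pow(r, n, n2)) % n2


def decrypt(n, priv, c):
    lam, mu = priv
    u = pow(c, lam, n * n)
    return ((u - 1) // n * mu) % n


def add(n, c1, c2):
    """Enc(a) * Enc(b) decrypts to a + b."""
    return (c1 * c2) % (n * n)


def scale(n, c, k):
    """Enc(a)^k decrypts to k * a for a non-negative plaintext integer k."""
    return pow(c, k, n * n)


def encrypted_dot(n, weights, ciphertexts):
    """Linear layer on encrypted inputs: the server never sees the plaintexts."""
    acc = encrypt(n, 0)
    for w, c in zip(weights, ciphertexts):
        acc = add(n, acc, scale(n, c, w))
    return acc


if __name__ == "__main__":
    n, priv = keygen()
    cts = [encrypt(n, m) for m in [2, 7, 1]]       # user encrypts inputs
    result = encrypted_dot(n, [3, 1, 4], cts)      # server computes blindly
    print(decrypt(n, priv, result))                # user decrypts: 3*2 + 1*7 + 4*1
```

Only the holder of the private key can read the result; the party running `encrypted_dot` handles ciphertexts only.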

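ZKP and FHE

Zero-knowledge proofs (ZKPs) and fully homomorphic encryption (FHE) are two powerful tools that, used together, provide a strong foundation for privacy protection. A ZKP lets one party (the prover) convince another (the verifier) that a statement is true without revealing anything beyond the statement's validity. FHE, by contrast, allows computation to be performed directly on data without decrypting it, which is useful wherever privacy is a concern. Together, ZK proofs and FHE form the backbone of zkLLM. They work together as follows:[6]

- FHE encrypts the user's data. This keeps the data secure throughout the entire process.

- The user asks the LLM to perform a computation on the encrypted data. zkLLM is designed to work efficiently with FHE for these computations.

- A ZK proof attests that the requested query or computation was carried out correctly. The LLM proves that it processed the data as instructed, without revealing the data or the intermediate steps.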

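Key Features

- Privacy-preserving inference: lets users query AI models without exposing their input data

- Verifiable AI: provides cryptographic proof of correct model execution and output generation

- Scalable architecture: designed to handle the computational demands of large language models

- Interoperability: compatible with a range of blockchain networks and AI frameworks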

应用

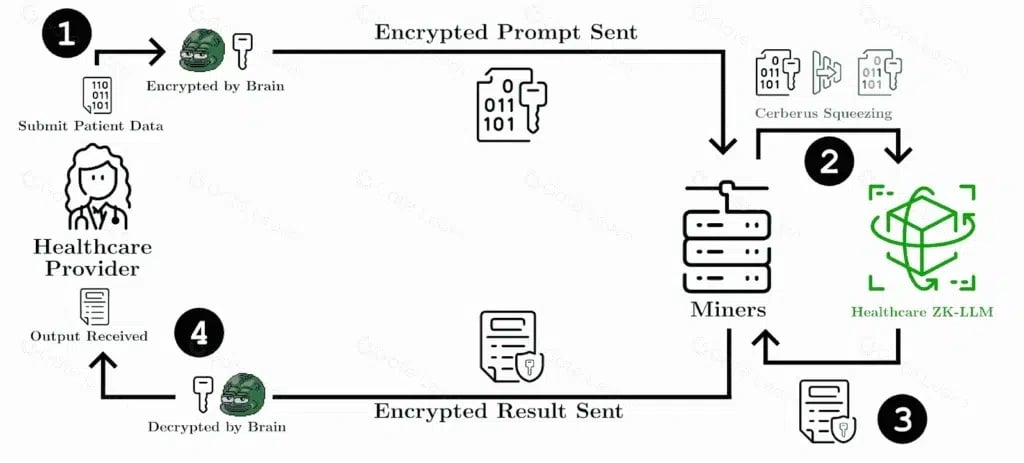

- 医疗保健:zkLLM 在医疗保健行业中非常有用。假设患者将其医疗记录上传到基于云的人工智能系统。zkLLM 可以分析数据,同时保持敏感的患者信息仍然加密,并且仍然可以识别任何潜在的健康问题。这保护了患者的隐私,同时允许使用先进的人工智能驱动的诊断。

- 安全处理患者数据,以进行诊断和治疗建议

- 对敏感医疗信息进行协作研究

- 金融:zkLLM 的主要用途之一可能是分析用户加密的财务数据。银行对账单和投资组合中的数据可以由 LLM 扫描,并要求根据该数据提供财务建议。LLM 可以识别投资机会,而无需解密财务信息。

- 保护隐私的信用评分和风险评估

- 安全分析金融交易以进行欺诈检测

- 法律

- 机密文档分析和合同审查

- 安全电子取证流程

- 个人助理

- 语音助手和聊天机器人的私有查询处理

- 在人工智能交互中安全处理个人信息

- 去中心化人工智能

- 区块链网络上的无需信任的人工智能计算

- 保护隐私的联邦学习系统

- 安全聊天机器人和虚拟助手 zkLLM 还可以用于为聊天机器人和虚拟助手提供支持。这些机器人可以在几秒钟内解决用户的查询。

- 私有内容审核 zkLLM 的另一个重要应用可能是分析、识别和删除互联网上的有害或不当内容。大型语言模型对加密的聊天记录进行操作,以识别任何违规或不当数据。另一方面,ZK 证明可用于证明聊天记录已正确扫描。

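Development and Current Status

The development of zkLLM is ongoing, with multiple research teams and companies working to implement and refine the technology. Key milestones include:

- Theoretical foundations established in academic papers on privacy-preserving machine learning

- Proof-of-concept implementations demonstrating feasibility for smaller neural networks

- Ongoing research into optimizing zero-knowledge proof systems for large-scale AI computation

Although zkLLM shows great promise, the technology is still at an early stage. Challenges remain in scaling the approach to handle the full complexity of state-of-the-art large language models while keeping it efficient enough for practical use.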

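Potential Impact

A successful implementation of zkLLM could have far-reaching effects on the AI and blockchain industries:

- Stronger data privacy: enabling AI applications in highly regulated industries

- Greater trust in AI systems: providing verifiable proof of correct model behavior

- Decentralized AI infrastructure: facilitating secure, distributed AI computation

- New business models: enabling AI models to be monetized without exposing proprietary data or algorithms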

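Future Directions

Research and development on zkLLM focuses on several key areas:

- Improving the efficiency of zero-knowledge proof generation for neural network computation

- Developing specialized hardware accelerators for zkLLM operations

- Creating user-friendly tools and frameworks for implementing zkLLM in existing AI pipelines

- Exploring hybrid approaches that combine zkLLM with other privacy-enhancing technologies

As the field progresses, collaboration among cryptographers, AI researchers, and blockchain developers will be essential to realizing the full potential of zkLLM technology.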

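Challenges and Limitations

Despite its potential, zkLLM faces several challenges:

- Computational overhead: zero-knowledge proofs can be computationally expensive, which may affect real-time performance

- Complexity: integrating ZK systems with LLMs requires deep expertise in both cryptography and AI

- Standardization: there are no established standards for implementing and verifying zkLLM

- Barriers to adoption: significant changes to existing AI infrastructure and workflows are required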
